Would you recognize it if you saw it?

A consensus in the field has been that UDL is not and cannot be a checklist. Nor is it a method. Nor is it the sum of the parts of the guidelines. Well, then, what is it? And how do we know UDL is being used? In this blog post, I share information I presented at the 2017 UDL-IRN Summit. I begin with a podcast (which is captioned!). For those who prefer to read, I have also included this material in essay format below.

NOTE: This work was originally shared and published as a proceeding from my talk at the 2017 UDL-IRN Summit.

THE STRUGGLE TO OPERATIONALIZE UDL

UDL has emerged in prominent legislative documents, UDL initiatives and research have received large grants, and UDL principles have been applied from preschool to higher education, yet all of this has occurred in the notable absence of rigorous, scientific evidence of efficacy. There appear to be two contrary narratives: one in scholarly publications and another in the reality of politics and practice.

On the one hand, scholarship has repeatedly decried the lack of a clear operationalized definition of UDL (e.g. Basham & Gardner, 2010; Edyburn, 2010; Lowery, 2016; Okolo & Diedrich, 2015; Rao, Ok & Bryant, 2014), and some (e.g. Edyburn) have raised the concern that without operationalization, UDL cannot truly be researched at all.

On the other hand, UDL has continued to grow and expand in practice and policy in spite of the alleged lack of research-driven evidence to support it (Ralabate, et al., 2012). With the bountiful and increasing social, political, and economic attention that UDL has been enjoying for the past decade, this absence of rigorous research may seem unfathomable. This may be all the more true given the drive for evidence-based practices to guide education over at least the last decade (e.g. the development of rigorous research standards for education; Odom et al., 2005). This led me to two questions: (1) Why has UDL not received the rigorous research needed to establish it as an evidence-based practice, and (2) in the absence of these data, how has UDL achieved the success that it has in our context?

One reason may be that there appear to be different perspectives on whether UDL has, in fact, been validated by research. For example, in contrast to Edyburn’s (2010) claims that “to date, there has been little research on UDL” (p. 34) and that UDL cannot be said to have been scientifically validated, the Center for Applied Special Technology (CAST, 2011) claimed in a webpage last updated in 2011 that UDL was founded on a decade of “modern research in the learning sciences: cognitive science, cognitive neuroscience, neuropsychology, neuroscience” (para. 2). The site goes on to provide a long list of citations supporting each of the checkpoints of the UDL framework. In this sense, a dissection of UDL allows one to find substantial evidence to support any given part of UDL.

However, this is where the apparent disagreement is grounded. Inasmuch as UDL is a framework, which in practice is believed to be more than the sum of its parts (Lowery, 2016), the research that supports all of the components of UDL, even taken altogether, cannot be assumed to be research that validates the framework of UDL. UDL is not, in other words, any one of the checkpoints, guidelines, or principles, nor any given combination of the said checkpoints or guidelines, but an intentional design using the three overarching principles and their subsumed guidelines and checkpoints (which are not seen as exhaustive) in a specific context to achieve specific goals.

The emphasis on the framework, which is made up of (but more than the sum of) its components, creates a complex construct. One may look only at the 31 checkpoints in UDL, for example, to grasp just how complex and dynamic this construct can become. If one were to use any three of the checkpoints, a combination calculation (choosing 3 of 31 without regard to order) demonstrates that there are 4,495 possible combinations that one could be using. If one were to require that one checkpoint be drawn from each principle, then there are still over 1,000 possible combinations. Of course, there are even more options, as one could use four or six or seven or twenty checkpoints. One could argue that the results of a researcher examining the combination of checkpoints 4, 18, and 29 cannot be compared meaningfully to those of a different researcher in a different context exploring the effects of a combination of checkpoints 2, 6, 15, 17, and 28. Moreover, there are additional variables that matter to research, even if they have less overt significance for practice: how long are these checkpoints utilized? Are they permanent fixtures in the classroom or temporary interventions? And so forth. With the degree of mathematical and practical complication inherent to the application of the UDL checkpoints, it is clear that UDL cannot be treated as a method to be empirically tested with objectivity. Thus it may be accurately said that UDL is based on empirically validated elements, but UDL is not empirically validated. The very flexibility that provides UDL such wonderful strength from the practitioner perspective is its greatest weakness as a research construct.
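The arithmetic behind these figures can be checked with a short script. This is only a sketch under stated assumptions: the total of 31 checkpoints comes from the essay, but the split of 12, 9, and 10 checkpoints across the three principles is an assumption (based on my reading of the UDL Guidelines version 2.0) and is not stated in the text above. The variable and function names are illustrative only.

```python
from math import comb, prod

# Total number of checkpoints, as stated in the essay.
TOTAL_CHECKPOINTS = 31

# ASSUMPTION: per-principle checkpoint counts, taken to follow UDL
# Guidelines version 2.0. The essay gives only the total of 31.
CHECKPOINTS_PER_PRINCIPLE = {
    "representation": 12,
    "action_and_expression": 9,
    "engagement": 10,
}


def unordered_selections(total: int, k: int) -> int:
    """Number of ways to choose k checkpoints from total, ignoring order."""
    return comb(total, k)


def one_from_each(counts: dict) -> int:
    """Number of ways to pick exactly one checkpoint from every principle."""
    return prod(counts.values())


# Any three checkpoints out of 31.
any_three = unordered_selections(TOTAL_CHECKPOINTS, 3)

# Exactly one checkpoint from each of the three principles.
one_per_principle = one_from_each(CHECKPOINTS_PER_PRINCIPLE)

# Every possible non-empty set of checkpoints, of any size.
any_nonempty_set = 2 ** TOTAL_CHECKPOINTS - 1

print(f"Any three of 31:          {any_three:,}")
print(f"One from each principle:  {one_per_principle:,}")
print(f"Any non-empty selection:  {any_nonempty_set:,}")
```

Under these assumptions, the script reproduces the 4,495 figure and gives 1,080 for the one-per-principle case. Once the size of the selection is left open, the count exceeds two billion, which illustrates why two studies are unlikely to be examining the same combination.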

This reality has caused some to be wary about UDL and its recent appearances in public policy. This may be because research in education, since at least the 1980s, has brought into focus the importance of a research or evidence basis for education policy and approaches (Cochran-Smith & Fries, 2005).

EXPANDING THE MEANING OF “EVIDENCE”

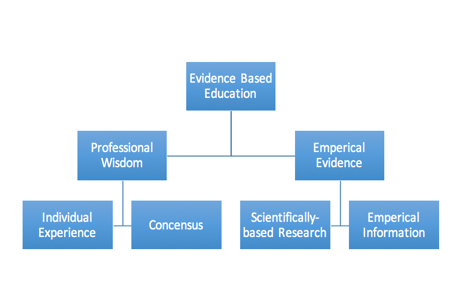

I suggest that there is an important distinction to be made here between the terms “research-based” and “evidence-based” practices. These terms have been used somewhat interchangeably in the past (Davies, 1999; Hargreaves, 1996). However, in a 2003 presentation on evidence-based practice, then USDOE Assistant Secretary for Educational Research and Improvement, Grover Whitehurst, argued that evidence-based practice could be parsed into the broad domains of “professional wisdom” and “empirical evidence,” with “scientifically-based research” being subsumed under the latter (Hood, 2003, p. 6; see figure 1). Whitehurst argued for the need for both in education, suggesting on the one hand that professional wisdom allows for practices to “adapt to local circumstances” and “operate intelligently in the many areas in which research evidence is absent or incomplete” (p. 7), and on the other hand that empirical evidence is necessary to “resolve competing approaches,” “generate cumulative knowledge” and “avoid fad, fancy, and personal bias.” This articulation and parsing of evidence-based practices is helpful for examining the state of UDL as a practice. What the framework lacks in research-based evidence it may make up for in what Whitehurst calls professional wisdom (consensus and experience of practitioners).

Figure 1. Different types of “evidence based education” according to Whitehurst in Hood (2003).

From this latter perspective, it would appear in many case studies that UDL does, in fact, work. Repeated application in real-world settings has been met with success for stakeholders. The messiness of the real-world environment coupled with the flexibility of the framework that is UDL may have thus far precluded this circumstantial, but prolific, evidence from passing the rigor tests necessary to be inducted into the “What Works Clearinghouse,” but professional educators are finding that, even without scholars telling them whether UDL ought to work in their classrooms, it is working.

One may argue that as a field, we have pushed education into the realm of hard sciences as much as we have been able to, and for good and sensible reasons. But it is vital that we not lose touch with the purpose of our study. In education, our objective is not to make education more scientific, but to make it more effective. Science may have been a means to this end, and it may have long been our most successful means, but it is hardly the only one.

Those who hold this line of argument may suggest that while we may not be able to clearly operationalize the independent variable of UDL, it is quite easy to pin down the dependent variables of student achievement in various ways. One may argue that if attempts to apply UDL, however vague or scattered, are met with greater access to learning for more students as evinced by assessment data, then UDL is leading to real progress in a field that has long struggled to achieve the ideal of true access for all.

After more than a decade of research, in the seven years since Edyburn’s (2010) paper exposing the urgent necessity of operationalization, and after many passionate position papers, presentations, and research reviews, it behooves us to ask: can UDL itself be operationalized? Studied empirically? We still don’t know. But we know that despite this fact, the claims that UDL would pass out of utility unless such an operationalized definition were produced with haste are not panning out. UDL has continued in the past 10 years, the past five years, the past year, to grow and expand although we as a field have not solved the riddle of its operationalization.

NEEDED: A NEW PARADIGM

Nevertheless, the need for clarity and consensus in the research community as to how we will operationalize UDL will continue to nag. In many cases, it is necessary to prevent misunderstanding and misapplication in the practitioner community as well (indeed, who can say what is a “misapplication” of UDL if we cannot say, definitively, what an “application” looks like?). In this section, I will propose an idea that considers the tumultuous history of attempts to operationalize UDL. This idea stems from the thought: if we cannot fit UDL into our current definitions of operationalized constructs, then perhaps we can reshape our definition of operationalized constructs to fit with UDL. In other words, just as UDL calls for us to recognize that it is not a student who is disabled, but a learning environment that is disabling, so we must recognize that the definition of UDL is not limited, but rather it is our attempt to define UDL by conventional means that is limiting its potential.

A TERRITORIAL DEFINITION OF UDL

In my pursuit of a new way to conceptualize the operationalization of UDL, I felt it prudent to first establish premises to guide my conclusion.

First, I concur with Israel, Ribuffo, & Smith (2014) that for a lesson to be considered in alignment with UDL, it must go beyond providing physical or even content-based access to the child. Israel et al. use an analogy to articulate this, suggesting that “the traditional classroom accessibility efforts via automatic doors, automatic classroom lights, and wider entryways to accommodate wheelchairs; these solutions offer entry into the classroom but do not alter the content or instruction once students are there” (p. 24). In this sense, developing and executing a UDL lesson must involve the intentional dissolution of barriers such that a student may access the room, the content, and the learning. This exceedingly broad boundary is insufficient as an operationalization of UDL, but nevertheless begins to hack away at what UDL is not (but is often confused to be; Edyburn, 2010), and thus forms a broad rule against which any operationalization can be measured.

Second, I believe that how we operationalize UDL is inherently connected to how we believe UDL ought to be taught to (preservice) teachers, and, by extension, how UDL ought to be used in PK-12 and higher education classrooms. For example, if we believe that using UDL to teach ninth graders about World War II must draw from all three principles (i.e. multiple means of representation, action and expression, and engagement), then operationalizing UDL as a constructed framework, whether in general or in application to developing preservice teachers, must also insist on drawing from all three. Conversely, if researchers recognize that arbitrary rules such as “one must use at least two checkpoints from at least three guidelines in each principle” are too rigid and impractical for dynamic classroom application (Lowery, 2016), then UDL ought not be so sharply operationalized at the conceptual level for research.

Third, I suggest that operationalizing UDL requires a subjectivity that is reflective of the fact that UDL is a framework, not a method. Any attempt to operationalize must recognize that the application of UDL is a complex, skill-based, subjective, decision-oriented form of pedagogy, the guiding principles for which will manifest uniquely depending on the context in which it is utilized. In other words, seeking to objectively operationalize UDL would be a disservice: what we need in research is qualitative description of the design process that teachers, researchers, or instructional designers used to implement the UDL framework in the specific context in which it was done; such description must be rich enough to demonstrate the core aspect of UDL, which, rather than being the U (universal), is perhaps better articulated as the D (design). In the realm of creative design, clear top-down objectivity and structure must give way to bottom-up post-positivism and post-modernist (post-)constructs. What UDL is and what it is not must be shaped by qualitative, intentional, creative, and contextual adherence to the framework.

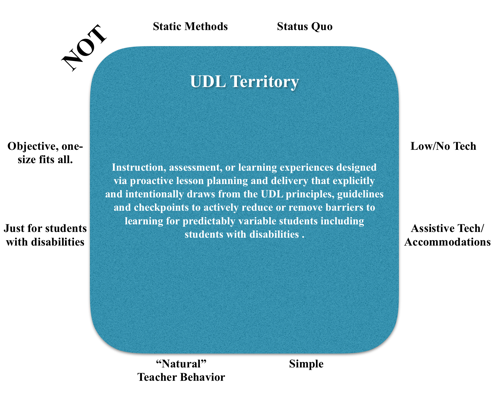

Figure 2. Operationalizing UDL as a “Territory”

In this light, Edyburn’s (2010) ten propositions are exactly what we need: a broad exploration of where the tentative boundary lines are for implementing the UDL framework; however, I would suggest that this is not “definitional” in the traditional sense. Explicitly or implicitly, the majority of Edyburn’s propositions state what UDL is not. I paraphrase seven of the ten here: UDL is not really parallel to UD architecture (proposition #1); it is not just good teaching or what we have always done, but rather about dynamic design (proposition #4); it is not “natural” (proposition #5); it is not low tech/no tech (proposition #6); it is not assistive technology (proposition #7); it is not simply about primary impact/primary target (proposition #8); it is not simple (proposition #10). The remaining three, affirmative-natured propositions are either intentionally broad in concept (proposition #2: “UDL is fundamentally about proactively valuing diversity”; proposition #3: “UDL is ultimately about design”) or about evaluation of the construct, which is not definitional (proposition #9: “Claims of UDL must be evaluated on the basis of enhanced student performance.”). I posit that focusing on what UDL is not is the correct idea; this does not lead to a definition, but to a territory (see figure 2) in which the definition of UDL is formed not through positive identification, but by broad understanding limited by clear boundaries.

A POTENTIAL SOLUTION?

This territorial approach does not add new content to the ways the field has already described UDL; rather, it adapts what has been established in a new conceptualization.

I propose that allowing for a territorial operationalization of UDL gives educators and researchers alike the ability to maintain the flexibility that is inherent to the construct of UDL, while providing just enough details to create clarity whereby something could be deemed UDL or not based on a set of broad conceptual inclusion criteria and a clear and sharp set of exclusion criteria.

Given the nature of UDL as a framework calling for thoughtful, intentional design, much of what makes a practice consistent with UDL is going to be non-empirical thought processes. In order to operationalize UDL in future research, therefore, I propose that researchers (or the teachers involved in the study) must explicate their context and thought process as to how and why they made the instructional and/or assessment and/or environmental design decisions that they did. While it is certain that not everyone will agree with the design choices that researchers or practitioners may make, the salient point in establishing the practice of UDL is that the researcher/practitioner is, in fact, consciously designing and using the framework of UDL in context. For example, in the methods section of research papers, future researchers could provide qualitative description of the objectives, predicted barriers, and reasons for applying the UDL checkpoints for the context in which learning was to occur during the observed period.

Doing so would have two major benefits: (1) it would provide a semi-objective measure by which the operationalization of UDL could be established (i.e. did the author demonstrate sufficient evidence of intentional design in context?) and (2) it would provide exemplification of the thought process necessary for actualizing UDL in a context, which others may find useful for transferring to their own context(s).

REFERENCES

Basham, J., & Gardner, J. (2010). Measuring universal design for learning. Special Education Technology Practice, 12(2), 15–19.

CAST (2011). UDL Guidelines – Version 2.0: Research Evidence. In National Center on Universal Design for Learning. Retrieved from http://www.udlcenter.org/research/researchevidence

Cochran-Smith, M., & Fries, K. (2005). Researching teacher education in changing times: Politics and paradigms. Studying Teacher Education: The Report of the AERA Panel on Research and Teacher Education, 69–109.

Davies, P. (1999). What Is Evidence-Based Education? British Journal of Educational Studies, 47(2), 108–121.

Edyburn, D. L. (2010). Would You Recognize Universal Design for Learning If You Saw It? Ten Propositions for New Directions for the Second Decade of UDL. Learning Disability Quarterly, 33(1), 33–41.

Hargreaves, D. H. (1996). Teaching as a research-based profession: possibilities and prospects. Teacher Training Agency London.

Hood, P. D. (2003). Scientific research and evidence-based practice. San Francisco: WestEd. Retrieved from http://www.wested.org/online_pubs/scientrific.research.pdf

Israel, M., Ribuffo, C., & Smith, S. J. (2014). Universal design for learning innovation configuration: Recommendations for preservice teacher preparation and inservice professional development. Retrieved from https://kuscholarworks.ku.edu/handle/1808/18509

Lowery, K. A. (2016). If Up is Down and Down is Up, What the Up is UDL? In Gardner, J. E., & Hardin, D., Networking, Implementing, Research, and Scaling Universal Design for Learning. Proceedings from the 3rd Annual UDL-IRN Summit, Towson, MD (pp. 71–76).

Odom, S., Brantlinger, E., Gersten, R., Horner, R., Thompson, B., & Harris, K. (2005). Research in Special Education: Scientific Methods and Evidence-Based Practices. Exceptional Children, 71(2), 137–148.

Okolo, C. & Diedrich, J. (2015, April). Universal design for learning in the professional literature. Presented at the Council for Exceptional Children 2015 Special Education Convention and Expo, San Diego, CA.

Ralabate, P., Hehir, T., Dodd, E., Grindal, T., Vue, G., Eidelman, H., & Carlisle, A. (2012). Universal design for learning (UDL): Initiatives on the move: Understanding the impact of the race to the top and ARRA funding on the promotion of universal design for learning.

Rao, K., Ok, M. W., & Bryant, B. R. (2014). A Review of Research on Universal Design Educational Models. Remedial and Special Education, 35(3), 153–166. https://doi.org/10.1177/0741932513518980